In the domain of hardware development, many industry professionals aren't even aware that reliability analysis exists. Their typical development process relies on tools and strategies that are common knowledge in engineering today. These are absolutely necessary for a good design, but on their own they are often not enough. We are talking about CAD software, Finite Element Analysis, rapid prototyping, electronic circuit simulations, iterative improvement cycles, systems simulations, and more. These have worked well for many companies in the past and will continue to work in the future. However, they will not be sufficient for companies that aim to be cutting-edge industry leaders, because their competitors are already gearing up and have made reliability analysis part of their standard development practices.

Design optimization

As many will know, designing a component or a system is not purely about maximizing performance; if it were, everything would be manufactured from some precious space-grade metal or similar. Instead, it requires a subtle balance between many variables, including performance, cost, availability, manufacturing throughput, supplier relationships, and more. In many cases, we have room to play with one or two of these variables to make the best overall product possible, and we do so while being careful not to fall below the minimum requirements for any of them.

When it comes to optimizing performance, we tend to overshoot and be conservative. I think this is good and necessary, as long as we are not excessively conservative. Sometimes this safety factor covers uncertainty about how the user is going to operate the system, unknown system interactions, and so on; at other times, it is driven purely by fear and intuition. The key to an optimal design is to identify the uncertainties, minimize them, and make the rest of your decisions data-driven.

A simple yet effective way to do this is to create a design that is considered good enough for the product's use specifications, fabricate a batch of ten or twenty units (or more, if possible), and start a stress test as early as possible. Once the samples have failed, you'll be able to pinpoint one or more weak points of your design and determine its limits with statistical evidence. This will help you adjust the design quantitatively, if necessary.
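To make the "statistical evidence" step more concrete, here is a minimal Python sketch of one common way to analyze such a test: fitting a two-parameter Weibull distribution to the failure times by median rank regression. It assumes every unit was run to failure (no suspensions) and uses Bernard's approximation for the median ranks. The function name `fit_weibull_mrr` and the cycle counts in the usage lines are hypothetical, and this is not necessarily the fitting method any particular tool uses.

```python
import math


def fit_weibull_mrr(failure_cycles):
    """Fit a two-parameter Weibull distribution by median rank regression.

    Assumes complete data: every unit in the sample ran to failure.
    Returns (beta, eta), the shape and scale parameters.
    """
    times = sorted(failure_cycles)
    n = len(times)
    if n < 2:
        raise ValueError("need at least two failure times")

    xs, ys = [], []
    for i, t in enumerate(times, start=1):
        # Bernard's approximation of the median rank of the i-th failure
        f = (i - 0.3) / (n + 0.4)
        # Linearized Weibull CDF: ln(-ln(1 - F)) = beta*ln(t) - beta*ln(eta)
        xs.append(math.log(t))
        ys.append(math.log(-math.log(1.0 - f)))

    # Ordinary least squares of y on x (rank regression on Y)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("failure times must not all be identical")
    beta = sxy / sxx
    intercept = y_mean - beta * x_mean
    eta = math.exp(-intercept / beta)
    return beta, eta


# Hypothetical cycles-to-failure for a batch of ten units
cycles = [9200, 11800, 13500, 15100, 16400, 17900, 19300, 21000, 23200, 26800]
beta, eta = fit_weibull_mrr(cycles)
print(f"shape beta = {beta:.2f}, scale eta = {eta:.0f} cycles")
```

Rank regression is used here because it needs nothing beyond the standard library; maximum likelihood estimation is a common alternative, especially when some units are removed from the test before failing.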

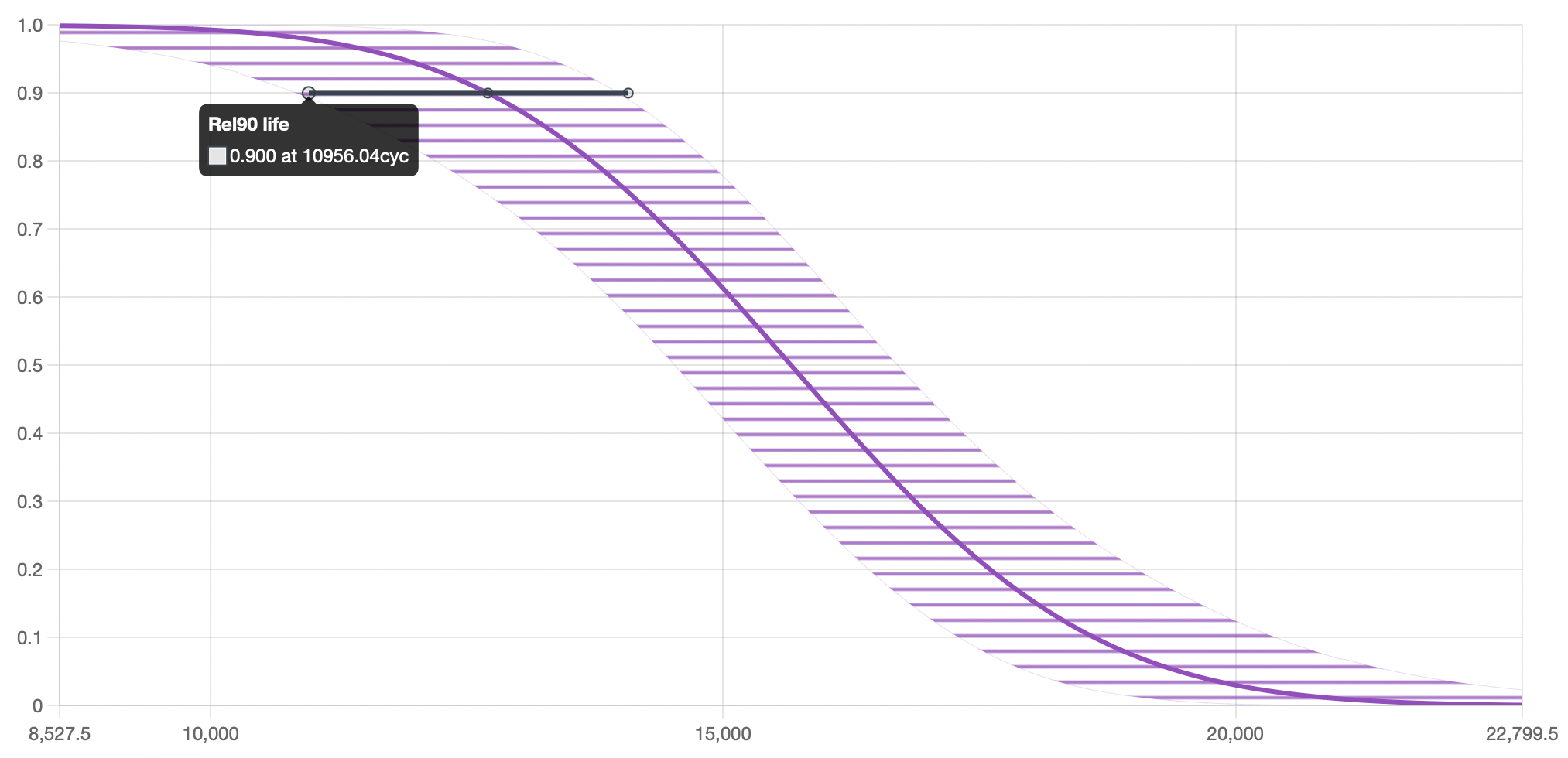

The image above shows the results of a test with a small number of units: the most conservative estimate of the number of cycles at which the system still has 90% reliability is a little below 11,000. This information can be used to decide whether a redesign is necessary, whether further tests are required, or whether the design is good to go.
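Once a Weibull model has been fitted, the reliability function and the cycle count for a given reliability level have closed forms: R(t) = exp(-(t/eta)^beta) and t = eta * (-ln R)^(1/beta). The sketch below assumes a two-parameter Weibull model with hypothetical parameters and computes the point estimate of the B10 life, the cycle count at which 90% of units are expected to survive. The "most conservative" value read from a plot like the one above is most likely a lower confidence bound, which sits below this point estimate and depends on sample size; computing it requires a confidence-bound method that this sketch does not implement.

```python
import math


def weibull_reliability(t, beta, eta):
    """Probability that a unit survives beyond t cycles: R(t) = exp(-(t/eta)**beta)."""
    return math.exp(-((t / eta) ** beta))


def weibull_life_at_reliability(reliability, beta, eta):
    """Cycle count at which reliability drops to the given level.

    Inverts R(t): t = eta * (-ln R)**(1/beta). With reliability=0.9 this is the B10 life.
    """
    if not 0.0 < reliability < 1.0:
        raise ValueError("reliability must be strictly between 0 and 1")
    return eta * (-math.log(reliability)) ** (1.0 / beta)


# Hypothetical fitted parameters: shape 2.5, scale 30,000 cycles
b10 = weibull_life_at_reliability(0.9, beta=2.5, eta=30000)
print(f"B10 life (point estimate): {b10:.0f} cycles")
```

With these hypothetical parameters, the point estimate comes out at about 12,200 cycles.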

Quantitative comparisons

It is also common to need to compare two or more components or systems intended to be part of our product, where a quantitative evaluation is needed to make a decision. This applies not only to optimization, as discussed above, where option A may be more durable than option B but also pricier and potentially overkill for our purposes. It is also a way of comparing apples to oranges in a way that makes sense.

Imagine your team is deciding between two different approaches to meeting one of your product's requirements, and they are so different that they are hard to compare. Both have their pros and cons, and both would be a proper solution. Typically, price would be the tiebreaker, but sometimes that is difficult to assess too. For instance, one option may have a lower component price, while its costs for assembly, quality control, maintenance, etc. even things out with the other. In this case, it can be a good idea to fabricate a batch of units of each option, run a durability test, and statistically characterize their reliability curves to use as the deciding factor. In cases like this, it's great to have a tool like Broadstat that simplifies calculating the probability distributions of the competing alternatives. And remember: if what you want is a reliability-based evaluation, it would be a huge mistake to test one unit of each option and declare the one that lasts longer the winner. Such a claim has no statistical basis, and conclusions drawn from that experiment are not sound.
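A small simulation shows why a one-unit-per-option test is so misleading. The sketch below assumes, purely for illustration, that both options follow Weibull distributions with the same hypothetical shape parameter (3.0), and that option A's true scale is 20,000 cycles versus 18,000 for option B, so A is genuinely the more durable design. It estimates how often a single unit of B outlasts a single unit of A and checks the estimate against the closed form that holds when both shapes are equal: P(B outlasts A) = eta_B^beta / (eta_A^beta + eta_B^beta). The function names are hypothetical.

```python
import random


def p_worse_wins_simulated(beta, eta_a, eta_b, trials=100_000, seed=1):
    """Monte Carlo estimate of P(one unit of B outlasts one unit of A).

    random.weibullvariate(alpha, beta) takes the scale first and the shape second.
    """
    rng = random.Random(seed)
    wins = sum(
        rng.weibullvariate(eta_b, beta) > rng.weibullvariate(eta_a, beta)
        for _ in range(trials)
    )
    return wins / trials


def p_worse_wins_exact(beta, eta_a, eta_b):
    """Closed form, valid only when both options share the same shape parameter."""
    return eta_b**beta / (eta_a**beta + eta_b**beta)


# Hypothetical options: A is truly more durable (larger scale), same shape
beta, eta_a, eta_b = 3.0, 20000.0, 18000.0
print(f"simulated: {p_worse_wins_simulated(beta, eta_a, eta_b):.3f}")
print(f"exact:     {p_worse_wins_exact(beta, eta_a, eta_b):.3f}")
```

With these assumed numbers, the less durable option B wins a one-on-one test roughly 42% of the time, so a single head-to-head result says very little about which design is actually more reliable.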

Cost reduction

It is standard practice among hardware development companies to release a first version of a product that still has unrealized cost-saving potential. When a company is competing in a fierce market and trying to position itself as an innovation leader, development timelines tend to be shorter than ideal in order to have a chance of being "first to market." As a result, later iterations of the product tend to include more mature versions of the earlier components, and better deals are made with suppliers once market demand is clearer, all of which leads to lower production costs.

These iterations of a product are a great opportunity to optimize its cost, but they must be made without compromising its quality. What makes this scenario ideal for a reliability study is that units of the first version, which is already in mass production, are cheaper to obtain for a test than a small batch of prototypes. This lets you set up a test with a larger sample size, which yields more accurate results that can serve as your quality benchmark. The new variants can then undergo their own reliability tests, and their results can be compared with those of the first version to confirm quantitatively that your cost-saving efforts are not affecting the product's performance.
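One way to check a cost-reduced variant against the benchmark, sketched here under stated assumptions, is a zero-failure (success-run) test. You pick a target, for instance the benchmark's 90% reliability at its B10 life, run n units of the new variant to that many cycles, and if none of them fail you have demonstrated at least that reliability with confidence C. The required sample size follows from the binomial success-run relation n = ln(1 - C) / ln(R). This assumes independent units and a pass/fail outcome at a fixed test duration; the function name is hypothetical, and comparing fitted reliability curves with confidence bounds is an equally valid alternative.

```python
import math


def success_run_sample_size(reliability, confidence):
    """Units needed in a zero-failure test to demonstrate `reliability` at `confidence`.

    From the binomial success-run relation: reliability**n <= 1 - confidence,
    i.e. n >= ln(1 - confidence) / ln(reliability).
    """
    if not (0.0 < reliability < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("reliability and confidence must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(reliability))


# Demonstrate 90% reliability at the benchmark's B10 life with 90% confidence
print(success_run_sample_size(0.90, 0.90))
```

For R = 0.90 and C = 0.90 this gives 22 units, all of which must reach the target cycle count without a failure; a single failure means the target has not been demonstrated at that confidence.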

Sales, marketing and brand

As we've seen in the previous sections of this post, making the best possible product is hard enough without also worrying about marketing and selling it. But the reality is that no matter how great your product is, if it does not sell, all your efforts may sadly be wasted. And selling is not always an easy task: salespeople sometimes struggle to make a product stand out in a sea of competing options that seem like copies of yours, even when they're not. I can't emphasize enough how important it can be to have a unique selling point that others lack, one strong enough to differentiate your product and catch potential clients' attention. If you add reliability information to your product's sales specifications, you'll put yourself in an advantageous position; and if your competitors are already doing it and you aren't, you had better start soon. Moreover, making the reliability of your product one of your main concerns will eventually carry over into your brand's identity, which will come to be perceived as that of a trustworthy manufacturer.

Conclusion

Reliability analysis is now an indispensable tool that every product development team should be paying attention to, just as CAD and FEA were decades ago, and as the various integrations of artificial intelligence will be. To be clear, this discipline has been around for decades and has been studied by many researchers. The difference between now and then is that it used to require teaching yourself a whole new branch of statistics, or attending training courses, in order to use very complex and expensive software. Now, a tool like Broadstat can get you actionable, useful data in a couple of hours without the steep learning curve. So there is no excuse! Start your first reliability project now, and avoid falling behind.